Following the construction of Circuit-bent jigsaw #2, I had a very interesting chat with cognitive scientist Tom Stafford, who pointed out how the piece draws out the underlying statistics of the scenes that pictures commonly frame:

“By rearranging the jigsaws, you destroy the meaningful interpretation of the scene, but continue sampling from the same underlying distribution (which is, as a consequence, highlighted, since the coherent interpretation of a scene is no longer there to distract)”.

He pointed me to this research, which shows that “scenes”, whilst they may contain different subject matter, generally adhere to a set of compositional principles that Circuit-bent jigsaw reveals:

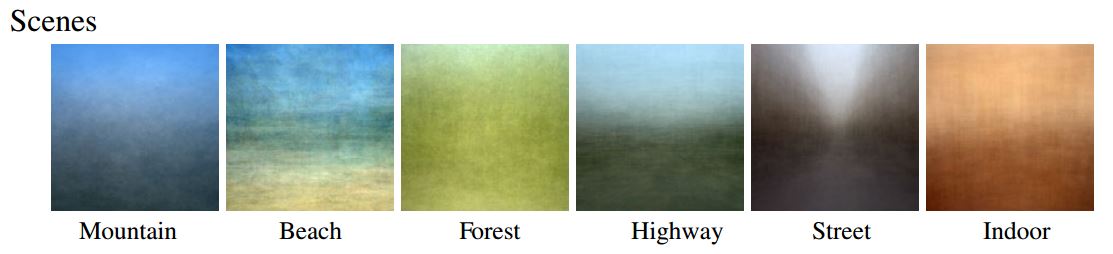

Images from Torralba, A., & Oliva, A. (2003). Statistics of natural image categories. Network: Computation in Neural Systems, 14, 391–412.

The above images were generated by averaging hundreds of images that fell into each of the broad subject categories. Though natural and man-made environments differ, scenes are generally lighter at the top, reflecting the fact that in most environments, natural or otherwise, the light comes from above. They are also constrained by human perspective, and as a result are usually viewed from standing eye level. Scenes that take in greater distances tend to be anchored on the horizon and feature smoother textures, as visual “clutter” decreases with distance.
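To make the idea of a composite scene concrete, here is a minimal sketch of pixel-wise averaging in plain Python. It is only an illustration, not the procedure from the paper: the tiny 3×3 greyscale “scenes” are invented toy data, and the function names `average_images` and `row_brightness` are my own.

```python
def average_images(images):
    """Pixel-wise mean of equally sized greyscale images (lists of rows)."""
    if not images:
        raise ValueError("need at least one image")
    height, width = len(images[0]), len(images[0][0])
    total = [[0.0] * width for _ in range(height)]
    for img in images:
        for y in range(height):
            for x in range(width):
                total[y][x] += img[y][x]
    n = len(images)
    return [[value / n for value in row] for row in total]


def row_brightness(image):
    """Mean brightness of each row, listed from top to bottom."""
    return [sum(row) / len(row) for row in image]


# Invented toy data: three 3x3 "scenes", brightness 0 (black) to 255 (white).
# Each is brighter at the top, as a sky-over-ground picture would be.
scenes = [
    [[200, 220, 210], [120, 90, 130], [40, 60, 50]],
    [[240, 230, 250], [100, 140, 110], [30, 20, 70]],
    [[180, 210, 190], [150, 80, 100], [60, 50, 10]],
]

mean_scene = average_images(scenes)
profile = row_brightness(mean_scene)
print(profile)  # brightness falls from the top row to the bottom row
```

The individual scenes differ in detail, but the averaged image keeps what they share: a bright top fading to a darker bottom.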

These principles can be seen in the Circuit-bent jigsaws, with lighter shades appearing at the top of the images, and the viewer’s eye drawn in towards the central, distant point. Though the individual units of the scene have been stripped of their semantic or contextual attributes, they still adhere to a set of predetermined rules, which are retained in the randomised images.
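The same point can be sketched in code. This is a toy model rather than a description of how the jigsaws were actually made: it assumes several puzzles share one cut, so a piece can be swapped between pictures but stays in its own position. Each piece is reduced to a single brightness value, and the data and the name `remix_pieces` are invented for illustration.

```python
import random


def remix_pieces(puzzles, rng):
    """Build a new picture by choosing, for every grid position,
    the piece at that same position from a randomly chosen puzzle."""
    rows, cols = len(puzzles[0]), len(puzzles[0][0])
    return [
        [rng.choice(puzzles)[r][c] for c in range(cols)]
        for r in range(rows)
    ]


# Invented toy data: each puzzle is a 3x3 grid of piece brightness values.
puzzles = [
    [[200, 220, 210], [120, 90, 130], [40, 60, 50]],
    [[240, 230, 250], [100, 140, 110], [30, 20, 70]],
    [[180, 210, 190], [150, 80, 100], [60, 50, 10]],
]

rng = random.Random(0)  # seeded so the remix is repeatable
remixed = remix_pieces(puzzles, rng)

# The picture is scrambled, but every piece still sits where its source
# picture put it, so the top row remains brighter than the bottom row.
top_mean = sum(remixed[0]) / len(remixed[0])
bottom_mean = sum(remixed[-1]) / len(remixed[-1])
print(top_mean > bottom_mean)
```

Under that assumption the remix destroys the scene but keeps sampling from the same position-dependent distribution, which is why the gradient from light to dark survives.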